Hello everyone,

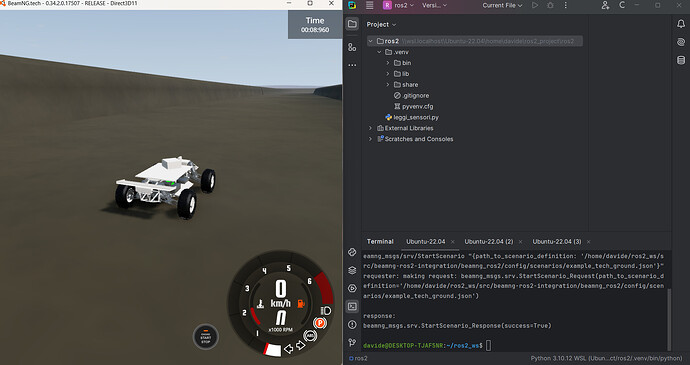

We are currently working on vehicle control in BeamNG.tech using ROS2. Our setup consists of a single ROS2 node that subscribes to various vehicle sensors (IMU, ultrasonic, time) and applies control using a PID-based approach.
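For reference, a generic PID update step of the kind mentioned above can be sketched as follows. This is a textbook discrete form, not our tuned controller. The `PID` class name, the placeholder gains, and the output clamp to [-1, 1] (chosen to match normalized throttle/steering inputs) are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    """Generic discrete PID controller (illustrative; gains are placeholders)."""
    kp: float
    ki: float
    kd: float
    out_min: float = -1.0  # assumed normalized actuator range
    out_max: float = 1.0
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, setpoint: float, measurement: float, dt: float) -> float:
        """Return the clamped control output for one time step of length dt (seconds)."""
        error = setpoint - measurement
        self.integral += error * dt
        # No derivative term on the first call, since there is no previous error yet
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        output = self.kp * error + self.ki * self.integral + self.kd * derivative
        # Clamp to the actuator range
        return max(self.out_min, min(self.out_max, output))
```

For example, `PID(kp=0.1, ki=0.0, kd=0.0).update(setpoint=1.0, measurement=0.0, dt=0.1)` returns `0.1`.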

1. At the moment, we are sending commands directly to the vehicle through the game client, as shown in the following excerpt from our Python script:

```python
import sys

import beamngpy as bngpy
from rclpy.node import Node
from sensor_msgs.msg import Imu, Range
# Assumption: VehicleControl and TimeSensor come from the BeamNG ROS2 message package (beamng_msgs)
from beamng_msgs.msg import TimeSensor, VehicleControl

NODE_NAME = "vehicle_control_node"  # hypothetical placeholder; our script defines its own name


class VehicleControlNode(Node):

    def __init__(self):
        super().__init__(NODE_NAME)

        # Connection parameters (overridable via ROS2 parameters)
        host = self.declare_parameter("host", "192.168.1.217").value
        port = self.declare_parameter("port", 25252).value
        vehicle_id = self.declare_parameter("vehicle_id", "ego").value

        if not vehicle_id:
            self.get_logger().fatal("No Vehicle ID given, shutting down node.")
            sys.exit(1)

        # Attach to an already running BeamNG.tech instance (no launch, no deploy)
        self.game_client = bngpy.BeamNGpy(host, port)
        try:
            self.game_client.open(listen_ip="*", launch=False, deploy=False)
            self.get_logger().info("Successfully connected to BeamNG.tech.")
        except TimeoutError:
            self.get_logger().error("Could not establish game connection, check whether BeamNG.tech is running.")
            sys.exit(1)

        # Look up the vehicle spawned by the scenario and connect to it
        current_vehicles = self.game_client.get_current_vehicles()
        assert vehicle_id in current_vehicles.keys(), f"No vehicle with id {vehicle_id} exists"
        self.vehicle_client = current_vehicles[vehicle_id]
        try:
            self.vehicle_client.connect(self.game_client)
            self.get_logger().info(f"Successfully connected to vehicle client with id {self.vehicle_client.vid}")
        except TimeoutError:
            self.get_logger().fatal("Could not establish vehicle connection, system exit.")
            sys.exit(1)

        # Incoming /control messages are forwarded to the vehicle by send_control_signal
        self.subscription = self.create_subscription(VehicleControl, "/control", self.send_control_signal, 10)

        # Sensor Subscriptions
        self.create_subscription(Imu, 'vehicles/ego/sensors/imu0', self.imu_listener_callback, 10)
        self.create_subscription(TimeSensor, 'vehicles/ego/sensors/time0', self.time_listener_callback, 10)
        self.create_subscription(Range, 'vehicles/ego/sensors/ultrasonic_left', self.left_listener_callback, 10)
        self.create_subscription(Range, 'vehicles/ego/sensors/ultrasonic_right', self.right_listener_callback, 10)
        self.create_subscription(Range, 'vehicles/ego/sensors/ultrasonic_front', self.front_listener_callback, 10)

    # Callback methods (send_control_signal, imu_listener_callback, ...) are omitted from this excerpt.
```

This approach works quite well in our scenario. However, we were unable to get the method you previously suggested to work (using `/vehicle/cmd` for throttle and steering, together with `beamng_agent` and `beamng_teleop_control`).

Would it be necessary to create an additional node to convert Twist messages into BeamNG control commands? Our goal is to have our node publish commands in the correct format.
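If such a converter node is needed, its core would presumably be a mapping from Twist fields to normalized control inputs. The sketch below shows one simple proportional mapping; the ROS2 subscribe/publish boilerplate is left out, and `ControlCommand`, `twist_to_control`, `max_speed` and `max_yaw_rate` are hypothetical names, not part of the BeamNG bridge.

```python
from dataclasses import dataclass


@dataclass
class ControlCommand:
    """Hypothetical stand-in for the control message the bridge expects."""
    throttle: float
    brake: float
    steering: float


def clamp(value: float, low: float, high: float) -> float:
    return max(low, min(high, value))


def twist_to_control(linear_x: float, angular_z: float,
                     max_speed: float = 10.0, max_yaw_rate: float = 1.0) -> ControlCommand:
    """Map Twist linear.x / angular.z onto throttle, brake and steering.

    Assumed proportional mapping: positive speed -> throttle in [0, 1],
    negative speed -> brake in [0, 1], yaw rate -> steering in [-1, 1].
    max_speed (m/s) and max_yaw_rate (rad/s) are hypothetical tuning values.
    """
    throttle = clamp(linear_x / max_speed, 0.0, 1.0)
    brake = clamp(-linear_x / max_speed, 0.0, 1.0)
    # ROS uses positive angular.z for a left turn; the sign BeamNG expects
    # for steering is not verified here and may need to be negated.
    steering = clamp(angular_z / max_yaw_rate, -1.0, 1.0)
    return ControlCommand(throttle, brake, steering)
```

For example, `twist_to_control(5.0, 0.5)` yields `ControlCommand(throttle=0.5, brake=0.0, steering=0.5)`.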

2. Additionally, we have encountered the following error in the bridge terminal, although it does not seem to affect functionality. We suspect it might be related to the sensor orientation when the sensor is not in its default position:

```
[ERROR] [1740049677.547881833] [vehicles.ego]: Fatal error [ego]: Rotation.as_quat() takes no arguments (1 given)
```

Do you have any insights on what might be causing this?
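One unconfirmed hypothesis: the message matches what older SciPy versions raise when `scipy.spatial.transform.Rotation.as_quat()` is called with an argument, since its `canonical` parameter was only added in SciPy 1.11. Assuming the bridge converts sensor orientations with SciPy, this quick check shows whether the installed version accepts such a call:

```python
import scipy
from scipy.spatial.transform import Rotation


def as_quat_accepts_arguments() -> bool:
    """Return True if this SciPy's Rotation.as_quat accepts a positional argument."""
    rot = Rotation.identity()
    try:
        # Passes canonical=False positionally; versions before 1.11 raise TypeError here
        rot.as_quat(False)
    except TypeError:
        return False
    return True


print("SciPy version:", scipy.__version__)
print("as_quat accepts arguments:", as_quat_accepts_arguments())
```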

3. Finally, we have a question regarding vehicle colors in a scenario. Currently, our test scenario defines the vehicle color like this:

```json
"name": "ego",
"model": "Desert_Buggy_Light",
"color": "white"
```

However, in the info_Desert_Buggy.json file, the vehicle has the following configuration:

```json
"Configuration": "Desert Buggy",
"Drivetrain": "4WD",
"defaultPaintName1": "Black",
"defaultPaintName2": "Red"
```

Here, `defaultPaintName1` and `defaultPaintName2` define the primary and secondary colors, which, as described in BeamNG's Vehicle Tutorial, are applied to specific materials (e.g., the chassis and the rims).

How can we make the scenario use this predefined color combination instead of setting a single "color" value?
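As a starting point, the two paint names can be read from the info file with a few lines of Python, assuming the file is strict JSON (`json.load` rejects trailing commas and comments). What to do with them afterwards is exactly our open question: we assume, without having verified it, that BeamNGpy's `Vehicle` accepts a `color2` argument alongside `color`, but we do not know whether the ROS2 bridge's scenario JSON exposes a matching key. `read_default_paints` is a hypothetical helper name.

```python
import json
from pathlib import Path
from typing import Optional, Tuple


def read_default_paints(info_path: str) -> Tuple[Optional[str], Optional[str]]:
    """Return (defaultPaintName1, defaultPaintName2) from a vehicle info_*.json file.

    Missing keys are returned as None.
    """
    with Path(info_path).open(encoding="utf-8") as f:
        info = json.load(f)
    return info.get("defaultPaintName1"), info.get("defaultPaintName2")


# Assumption (unverified for the ROS2 bridge): these names could then be passed
# as color/color2 when spawning the vehicle through BeamNGpy.
```

For the `info_Desert_Buggy.json` entries quoted above, this would return `("Black", "Red")`.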

Any example or clarification would be greatly appreciated!

Thanks in advance for your help, have a good day!